My resume

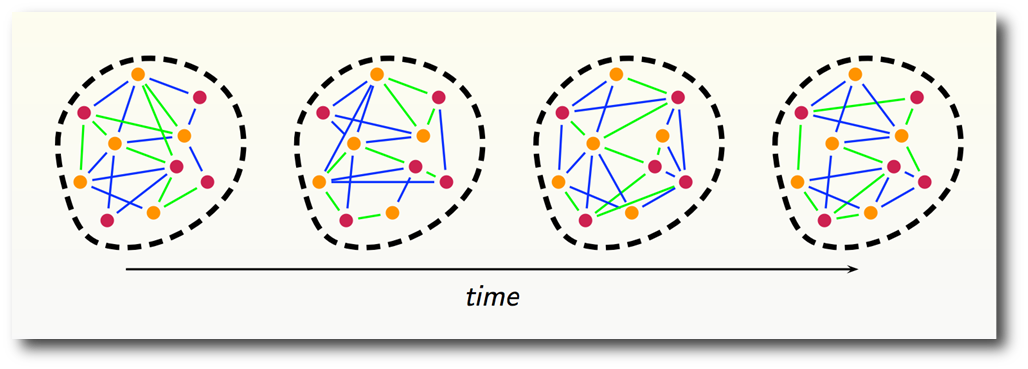

I am a machine learning researcher at Raytheon BBN Technologies. I have worked on computer vision, bioinformatics and statistical analysis problems. I am interested in different approaches to learning from disparate modalities of data collected from different sensors. I am also interested in applications of data fusion to graphs and, more generally, in learning from graph data.

I have a PhD in Applied Mathematics and Statistics from Johns Hopkins University. My PhD advisor was Carey Priebe. We worked on projects involving graph data as well as data in dissimilarity representation. My dissertation research involved using dissimilarity data from disparate sources to solve learning problems. These types of problems are examples of multiview learning. My approach is a dissimilarity-centric method that finds a common representation for the disparate data from different views. An extension of my approach solves the seeded graph matching problem, a variant of the graph matching problem in which a portion of the vertex correspondences are known.

I also have an M.Sc. in Engineering from Brown University, where I worked on computer vision problems at the LEMS lab.

Also at Johns Hopkins (2007-2009), I was a research assistant on the Cardiovascular Research Grid project. There I developed code for predicting sudden cardiac death or VT/VF (ventricular tachycardia/ventricular fibrillation) events in people with implantable cardioverter defibrillators (ICDs).

In addition to becoming familiar with different statistical methods, I also try to gain as many computing skills as I can. Learning R was an arduous journey. In the end I learned to love R by repeatedly failing and occasionally succeeding. See the software section for the R packages I am working on.

I also work with Matlab and Python for scientific computing. Here’s my GitHub page, by the way.

I like learning new things and solving practical data problems. My two career goals have been to improve my software development skills and learn about new mathematical methods and approaches. You could also say I am a typeface and design enthusiast.

Like the best statistics and data science practitioners, I am adept at learning new computer languages.

I used C++ for a long time, working with the VXL library. I picked up Python during my PhD, and it is my (current) favorite computer language.

PhD in Applied Math and Statistics, 2014

Johns Hopkins University

M.Sc. in Applied Math and Statistics, 2008

Johns Hopkins University

M.Sc. in Engineering, 2005

Brown University

BSc in Electrical and Electronics Engineering, 2003

Bogazici University

RGraphM is a graph matching R package based on [graphm](http://cbio.mines-paristech.fr/graphm/). Coming soon to CRAN.

Wed, Aug 1, 2012, 8th World Congress in Probability and Statistics

Mon, Aug 1, 2011, Joint Statistical Meetings 2011


I have decided to switch to the Academic theme for Hugo, as it makes much more sense for a researcher. Now I just need to collect all the things I have done over the years.

Content can be written using Markdown, LaTeX math, and Hugo Shortcodes. Additionally, HTML may be used for advanced formatting.

I am in the process of creating my new personal website. I wanted it to have a simple design and the option to generate pages without writing HTML by hand. That is why I am using Herring Cove, although it may be overkill for a personal website.

Curated over the years

Advanced R development - GitHub

How to improve your R and data analysis skills by doing, asking for help and learning from online sources

R, StatET/Eclipse and LaTeX primer

R package for managing data projects

StatET: an Eclipse plugin for R

Robust workflow with StatET + Eclipse (+ SVN + LaTeX + Sweave + BibTeX + Zotero)

R examples: usage of different libraries

GitHub tutorial

Development documentation for a lot of languages

C++ code style

Python for stats and data analysis

Primer explanations of math concepts

Blog post on Neural Networks, and hypotheses on why they work well

ColorBrewer, for choosing graph color palettes that are distinct or have other desired properties: http://colorbrewer2.org/

A Survey of Free Math Fonts for TeX and LaTeX

The 100 Best Fonts (in a Huge Sortable Table)

History of Neue Haas Grotesk (aka Helvetica)

One of the best collections of reading material (both fiction and non-fiction articles) for your Pocket: Longform

Sites for Learning

Some of the things I have worked on.

ALADDIN : Automated Low-Level Analysis and Description of Diverse Intelligence Video

Prediction of Heart Arrhythmias

My thesis project: how to use disparate data with very different representations for inference.


The other portion of my dissertation project.

DARPA program for organizing big data analysis and visualization efforts.

I taught an intersession course on R programming at Johns Hopkins. Here are my class notes:

Let’s first start RStudio. RStudio is a GUI that helps you organize the R expressions (functions called with some arguments) you run. It also collects the plots and output those expressions produce.

There are four panes:

- The upper left is the R script editor. Here you enter your commands and save them as a text file.
- The lower left is the R console. Here you see the commands sent to R and the results of those expressions. The results of expressions can be stored in variables to be used in later expressions.
- The upper right shows the variables and the data stored in them.
- The lower right pane has the Files, Plots, Packages and Help tabs:
  - Files lists the files in the current working directory on your computer.
  - Plots shows any plots you have asked R to draw.
  - Help shows information about R functions.
  - Packages: we don’t need to worry about this for now.

We’ll use R to read, modify and analyze data. Let’s start entering some R expressions and see the results.

The most basic way to organize numeric data is a vector. Let’s create a vector whose elements are numbers by concatenating a few of them. We’ll call the “c” function with the numbers as its arguments.

#creating a numeric vector

a.vector <- c(2,4,5)

If we need a sequence of integers, we can use the colon operator (:) between the starting integer and the ending integer:

another.vector <- 2:6

We can concatenate different vectors to get new vectors:

a.brand.new.vector <- c(a.vector,another.vector)
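Here is a small self-contained check of what these three constructors produce, assuming a fresh R session. length() is the same function used a little later in these notes.

```r
# The two vectors from the examples above
a.vector <- c(2, 4, 5)
another.vector <- 2:6   # the colon operator gives 2, 3, 4, 5, 6

# c() joins its arguments end to end, in the order they are given
a.brand.new.vector <- c(a.vector, another.vector)
print(a.brand.new.vector)   # 2 4 5 2 3 4 5 6
length(a.brand.new.vector)  # 8
```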

For any sequence with regular increments, “seq” is the function to use. Its first three arguments are the starting number, the ending number and the increment:

a.seq.of.numbers <- seq(0,1,0.01)
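Naming the arguments of seq makes the call easier to read. The sketch below also uses the length.out argument of seq. That argument asks for a fixed number of evenly spaced values instead of a step size. It is an extra illustration and not part of the original exercise.

```r
# seq(from, to, by): start at 0, stop at 1, step by 0.01
a.seq.of.numbers <- seq(0, 1, 0.01)
length(a.seq.of.numbers)   # 101 values, because both endpoints are included

# The same call with named arguments
same.seq <- seq(from = 0, to = 1, by = 0.01)

# length.out asks for a fixed number of evenly spaced values instead of a step
five.points <- seq(0, 1, length.out = 5)
print(five.points)         # 0.00 0.25 0.50 0.75 1.00
```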

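If we need to store data as a two-dimensional array, we use the “array” function and give it the number of rows and columns. Our sequence has 101 numbers, so only the first 100 are used to fill a 10 by 10 array: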

an.array <- array(a.seq.of.numbers,dim=c(10,10))

In the “array” function, we use the “dim” argument, a vector of length two (the number of rows and the number of columns). The functions we call may also have other arguments that we don’t provide. R uses the default values for those arguments, which you can look up in the help page for that function. To do that, enter

?array

We usually won’t need to look up all these arguments, though, since the default values are sensible.
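To see where “array” places the numbers, this small sketch rebuilds an.array in a fresh session and inspects a few cells. dim() reports the number of rows and columns. The comments assume R’s column-by-column filling order.

```r
a.seq.of.numbers <- seq(0, 1, 0.01)   # 101 numbers
an.array <- array(a.seq.of.numbers, dim = c(10, 10))

dim(an.array)     # 10 10
# array() fills the first column top to bottom, then the second column, and so on,
# so the first column holds 0.00 ... 0.09 and the second column starts at 0.10
an.array[, 1]
an.array[1, 2]    # 0.1
# Only the first 100 of the 101 numbers fit, so the last one (1.00) is not used
an.array[10, 10]  # 0.99
```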

day.number <- 1:7

What is the size of the vector?

length(day.number)

Suppose the data is not numeric but is composed of strings (any combination of characters: words, sentences, etc.), as with qualitative data. We can store multiple strings in a vector, just like numbers. We call such vectors “character vectors”. We let R know we’re dealing with characters by putting quotes around them.

#Creating a character vector

#We can store qualitative data as vectors, too. (2.1.1)

day.names <- c("Mon","Tue","Wed","Thu","Fri")

During analysis of data, one often needs to access or modify some portion of a vector. To do this, we put square brackets after the name of the vector and enter a vector of indices inside them. The elements are indexed starting from 1, so to get the first three elements of “day.names”:

# If we want to use only part of a vector, we use indexing to choose

# which elements we want

#Numeric indexing

day.names[1:3]

If we want the elements with indices 2, 4 and 5 (the numbers we stored in a.vector):

day.names[a.vector]
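Here is a self-contained sketch of numeric indexing on a five-day copy of day.names. It also shows that indexing on the left of <- replaces the selected elements, which is how the “modify” part of the paragraph above works. The change to “Monday” is only for illustration.

```r
day.names <- c("Mon", "Tue", "Wed", "Thu", "Fri")
a.vector <- c(2, 4, 5)

day.names[1:3]        # "Mon" "Tue" "Wed"
day.names[a.vector]   # "Tue" "Thu" "Fri"

# Indexing on the left of <- replaces only the selected elements
day.names[1] <- "Monday"
print(day.names)      # "Monday" "Tue" "Wed" "Thu" "Fri"
```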

To access some portion of the two-dimensional array we created, we give the row indices and the column indices, separated by a comma:

an.array[1:4,1:3]
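This short sketch shows how the shape of the result follows from the index vectors. It uses dim() and length(), and rebuilds an.array so the block runs on its own.

```r
an.array <- array(seq(0, 1, 0.01), dim = c(10, 10))

# Rows 1-4 and columns 1-3 give a 4 x 3 block
block <- an.array[1:4, 1:3]
dim(block)                  # 4 3

# Leaving one index empty selects everything in that dimension
third.row <- an.array[3, ]  # all 10 columns of row 3
length(third.row)           # 10
```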

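If we want to edit some elements of vectors and arrays, we can edit them in RStudio. If you’re using RGui, you can use the edit function to open a spreadsheet interface. edit returns the edited copy and leaves the original unchanged, so assign the result back to keep the changes:

an.array <- edit(an.array)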

The array we created is a two-dimensional table of numbers. Every element of an array has the same type (all numbers or all strings, for example). But data can be a combination of different kinds of variables. In the traditional spreadsheet convention, columns correspond to the variables or features (qualitative or quantitative) being studied, and rows correspond to different observations of those variables. We will use data frames in R to store data organized like this. A data frame in R is a collection of vectors that all have the same length but can be of different types (numeric vectors, character vectors). Each vector forms one column of the data frame. Many datasets that you load into R will be stored in data frames.

# data.frames in R

Let’s create a data frame composed of the names of the days and the integers corresponding to those days. The “data.frame” function creates a data frame with each supplied argument as one of the columns. Columns can also be named by supplying the names when creating the data frame. In the following example, the names are “number” and “names”.

#creating a data.frame (many datasets will be in this form)

a.data.frame <- data.frame(number=day.number,names=day.names)

This R expression causes an error because the vectors don’t have the same length: day.number has 7 elements, but day.names has only 5. Let’s fix this by adding the weekend:

day.names <- c(day.names,"Sat","Sun")

a.data.frame <- data.frame(number=day.number,names=day.names)
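The sketch below reproduces the failed call and the fix in one self-contained block. tryCatch() and conditionMessage() are base R functions. They are used here only to capture the error message instead of stopping the script. nrow() and ncol() report the size of the resulting data frame.

```r
day.number <- 1:7
day.names <- c("Mon", "Tue", "Wed", "Thu", "Fri")

# With 7 numbers and 5 names, data.frame() stops with an error;
# tryCatch() captures the error message so the script keeps running
result <- tryCatch(
  data.frame(number = day.number, names = day.names),
  error = function(e) conditionMessage(e)
)
print(result)

# After adding the weekend, both columns have 7 elements
day.names <- c(day.names, "Sat", "Sun")
a.data.frame <- data.frame(number = day.number, names = day.names)
nrow(a.data.frame)   # 7
ncol(a.data.frame)   # 2
```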

Indexing for data frames works like indexing for arrays. Leaving the row index or the column index empty selects all rows or all columns:

#Indexing for data frames

a.data.frame[1:3,]

a.data.frame[,2]

One can also access specific columns by using the name of that column, which will return the vector in that column

a.data.frame$names

And we can access some portion of that vector like any vector

a.data.frame$names[1:3]

The variables in data can also be logical variables, that is, they take one of the values TRUE or FALSE. Logical vectors that store this kind of data are very useful, especially for indexing.

# A vector can be composed of logical values, too (TRUE or FALSE)

a.logic.vector<-c(TRUE,FALSE,TRUE,FALSE,FALSE,FALSE,FALSE)

If we use one of the comparison operators (“<”, “>”, “<=”, “>=”, “==”, “!=”) to compare a vector with a value, the result is a logical vector. Each element is TRUE where the comparison holds and FALSE where it doesn’t.

day.names=="Mon"

day.names=="Tuesday"

day.number<3
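Here is a self-contained sketch of the comparisons above, using the seven-day vectors. It also uses & (element-wise “and”) to combine two comparisons. That operator is an extra illustration not covered in the notes.

```r
day.names <- c("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")
day.number <- 1:7

day.names == "Mon"      # TRUE for the first element only
day.names == "Tuesday"  # all FALSE: the vector stores "Tue", not "Tuesday"
day.number < 3          # TRUE TRUE FALSE FALSE FALSE FALSE FALSE

# != means "not equal"; & combines two logical vectors element by element
weekday <- day.names != "Sat" & day.names != "Sun"
print(weekday)          # TRUE for Mon through Fri, FALSE for Sat and Sun
```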

If we want to get some specific elements of a vector, say “a”, we can index it with a logical vector of the same length. The elements of “a” that are extracted are the ones with TRUE values in the logical vector used for indexing.

#logical indexing (3.3,3.4)

day.names[a.logic.vector]

# Count the number of TRUEs in the logical vector

sum(a.logic.vector)
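Because TRUE counts as 1 and FALSE as 0 in arithmetic, sum() counts the TRUE values and mean() gives their proportion. The sketch below checks this. It also shows that which() (covered a bit further on) picks out the same elements by position.

```r
day.names <- c("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")
a.logic.vector <- c(TRUE, FALSE, TRUE, FALSE, FALSE, FALSE, FALSE)

day.names[a.logic.vector]         # "Mon" "Wed"

# In arithmetic, TRUE counts as 1 and FALSE as 0
sum(a.logic.vector)               # 2 TRUE values
mean(a.logic.vector)              # proportion of TRUE values, 2/7

# Logical indexing picks the same elements as numeric indexing with which()
day.names[which(a.logic.vector)]  # "Mon" "Wed"
```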

The logical indexing is very useful, when you want to extract some portion of the vector that satisfies a particular condition. Let’s look at an example

The data function loads a dataset that’s built into R. The nhtemp dataset holds the average yearly temperatures in New Haven, in degrees Fahrenheit, from 1912 to 1971. It is loaded under the name nhtemp.

data(nhtemp)

One can use the ls() function to list all the variables that are currently loaded into R:

ls()

nhtemp is a time series (ts) object, which is slightly different from a vector, so we first turn it into a regular vector:

nhtemp <- as.vector(nhtemp)

If we want the temperature records that are higher than 54 degrees, we can index with a logical vector:

nhtemp>54

nhtemp[nhtemp>54]

If we want the indices of the TRUE values in a logical vector, we use the “which” function:

which(nhtemp>54)
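Converting nhtemp with as.vector drops the years. The sketch below keeps them separately with time(), a base R function that returns the time points of a ts object. The same logical vector can then report which years were warmer than 54 degrees.

```r
data(nhtemp)                        # yearly New Haven temperatures, 1912-1971
years <- as.vector(time(nhtemp))    # the years stored in the time series
temps <- as.vector(nhtemp)

warm <- temps > 54                  # logical vector, one entry per year
temps[warm]                         # the temperatures above 54
years[warm]                         # the years in which they occurred
which(warm)                         # their positions in the vector
```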

Now we can use the plot function to plot this series of temperatures. If we give plot only one argument, it plots the values against their indices (1, 2, 3 and so on):

plot(nhtemp)

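To draw an x-y plot of temperature against year, we give the years as the first argument:

plot(1912:1971,nhtemp)

With type='l', plot connects the values with lines instead of drawing separate points:

plot(1:60,nhtemp,type='l')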

R has functions for the common summary statistics:

mean(nhtemp)

median(nhtemp)

max(nhtemp)

min(nhtemp)

var(nhtemp)

sd(nhtemp)

The standard deviation is the square root of the variance, so this gives the same value as sd:

sqrt(var(nhtemp))

summary(nhtemp)
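As a sanity check on what var and sd compute, this sketch recomputes the sample variance from its definition, dividing by n - 1. It also calls quantile(), which returns the minimum, quartiles and maximum that summary also reports. datasets::nhtemp refers to the same built-in data without calling data().

```r
# nhtemp as a plain numeric vector, as in the notes
temps <- as.vector(datasets::nhtemp)
n <- length(temps)

# Sample variance from its definition: squared deviations from the mean, divided by n - 1
manual.var <- sum((temps - mean(temps))^2) / (n - 1)
all.equal(manual.var, var(temps))        # TRUE

# The standard deviation is the square root of the variance
all.equal(sqrt(manual.var), sd(temps))   # TRUE

# Minimum, quartiles and maximum
quantile(temps)
```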

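For plotting histograms, we use the hist function. Its breaks argument controls the bins in one of two ways. A single number is only a suggestion for the number of bins. A vector of numbers gives the bin edges, and those edges must cover the whole range of the data: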

hist(nhtemp)

hist(nhtemp,breaks=14)

hist(nhtemp,breaks=seq(floor(min(nhtemp)),ceiling(max(nhtemp)),0.5))
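hist can also return the bin edges and counts without drawing anything, via its plot = FALSE argument. This is a convenient way to check that the chosen breaks cover every value. The sketch below uses breaks every 0.5 degrees, rounded outward with floor and ceiling so the range of the data is always spanned.

```r
temps <- as.vector(datasets::nhtemp)

# Bin edges every 0.5 degrees, from just below the minimum to just above the maximum
my.breaks <- seq(floor(min(temps)), ceiling(max(temps)), 0.5)

# plot = FALSE returns the histogram's numbers without drawing it
h <- hist(temps, breaks = my.breaks, plot = FALSE)
h$breaks                          # bin edges
h$counts                          # number of years in each bin
sum(h$counts) == length(temps)    # TRUE: every year falls in exactly one bin
```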

boxplot(nhtemp)

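Categorical data can be summarized in a contingency table. Here “smokes” records Y or N for ten observations, and “amount” takes the values 1, 2 and 3. The steps are:

- table counts each combination of the two variables.
- prop.table turns the counts into proportions.
- chisq.test runs a chi-squared test of independence between the two variables.

smokes <- c("Y","N","N","Y","N","Y","Y","Y","N","Y")

amount <- c(1,2,2,3,3,1,2,1,3,2)

a.table <- table(smokes,amount)

prop.table(a.table)

chisq.test(a.table)

With counts this small, R warns that the chi-squared approximation may be incorrect.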
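The chi-squared test compares the observed counts with the counts expected if the two variables were independent. Each expected count is the row total times the column total, divided by the grand total. This sketch computes them by hand with base R’s rowSums, colSums and outer. It then checks them against the expected component of chisq.test’s result. suppressWarnings is used only to hide the small-sample warning.

```r
smokes <- c("Y","N","N","Y","N","Y","Y","Y","N","Y")
amount <- c(1,2,2,3,3,1,2,1,3,2)
a.table <- table(smokes, amount)

# Expected count for each cell under independence:
# row total * column total / grand total
expected <- outer(rowSums(a.table), colSums(a.table)) / sum(a.table)
print(expected)

# chisq.test() stores its own expected counts in $expected
test <- suppressWarnings(chisq.test(a.table))
all.equal(unname(expected), unname(test$expected))   # TRUE
```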